He credits IEEE with teaching him important soft skills

When Arjun Pillai was a youngster, the words entrepreneur and startup weren’t part of his vocabulary. It wasn’t until he became an IEEE student member in 2006 that Pillai learned those terms. He served on several IEEE committees and had leadership positions in IEEE groups—which helped him discover his managerial instincts. He learned from other IEEE members what running a tech company entails. And he belatedly realized that his father, who ran a small rubber-band manufacturing company next to the family’s home in India, was an entrepreneur.

What he learned persuaded Pillai to become an entrepreneur himself, to create “something bigger than me.”

He helped launch two companies that develop software-as-a-service platforms—both of which he and his cofounders subsequently sold.

His most recent venture, Insent, was a business-to-business chat platform based in Denver; it was acquired last year by software and data company ZoomInfo of Vancouver, Wash. Pillai is now senior vice president of ZoomInfo’s products and growth department.

He credits IEEE with giving him leadership training and opportunities to hone his public-speaking skills. As an active volunteer with the IEEE Kerala (India) Section, he spoke at numerous conferences and meetings. He also served on the IEEE Region 10 humanitarian technology activities committee and was vice chair of IEEE Young Professionals.

Those activities helped him improve his English language skills, he says, as well as his interpersonal skills.

“IEEE helped me gain the confidence I needed to found my first startup, in 2012,” the senior member says.

After graduating in 2010 with a bachelor’s degree in electronics and communication engineering from the Cochin University of Science and Technology in India, Pillai joined IT company Infosys, in Bangalore, as a systems engineer. He left in 2012 and founded Profoundis with three former classmates: Jofin Joseph, Nithin Sam Oommen, and Anoop Thomas Mathew. The company, based in Cochin, aimed to help businesses and other organizations connect with customers.

“I was 23 years old. I was young and stupid and had no idea what I was getting into,” Pillai says, laughing. He served as chief executive, while Joseph was chief operating officer, Oommen was chief of data research, and Mathew was chief technology officer.

During the company’s first two years, it developed four products—a management system for online testimonials, a financial analytics platform for small and midsize businesses, an assignment system for college students, and a social media analytics tool—all of which failed to get any traction within their intended markets, Pillai says.

“But we had to just keep going,” he says. “The failure didn’t really register as such. It was just another day at work.”

In 2015 Profoundis launched its fifth product, Vibe: sales software that helps businesses identify prospective customers. It proved to be a winner. Vibe collects data about companies from the Internet, organizes it into a searchable list, and makes it available to marketers and salespeople. More than 200 companies in dozens of countries have used it. The success of the product enabled the company to grow from seven employees to 72. FullContact, a software company in Denver, acquired Profoundis nine months later.

“It was the first product acquisition in the history of my state of Kerala,” Pillai says.

After Profoundis was sold, Pillai joined FullContact as the head of data strategy, working in Denver. But he says he didn’t really enjoy working for another company.

“Once you’re an entrepreneur, you kind of have that bug all the time,” he says. He also struggled with the fact that it wasn’t his company anymore, he says.

“I didn’t do a good enough job transitioning from CEO to employee,” he says. “I always tell other entrepreneurs about my experience so they don’t make the same mistake. This was the most important thing I learned after selling my first company.”

Pillai left FullContact after two years and worked as a consultant until 2018, when he founded Insent with IEEE Member Prasanna Venkatesan. The two cofounders had met through their volunteer activities with IEEE a decade earlier.

The startup’s B2B platform used a combination of human and chatbot conversations to personalize a customer’s purchasing experience with a retailer. After the company was acquired, the platform was renamed ZoomInfo Chat.

ZoomInfo hired all of the startup’s employees.

In his new position, Pillai led the release of ZoomInfo Chat. He is currently managing the company’s data portfolio.

“ZoomInfo has the best business data assets in the world,” he says. “It’s the core of the company, so I have a big responsibility.”

Pillai also invests in startups including Inflection, Savant Labs, and Toplyne. Inflection and Savant Labs are data analytics companies, while Toplyne develops software.

“Investing in others gives me the ability to learn about new industries,” he says.

Pillai says that running his own company gave him an unparalleled education on how to be a CEO, and he has learned from both his successes and his failures.

Pillai, who had no mentor to teach him how to run a company, is now a strong advocate for supporting budding entrepreneurs. For the past five years, he has been an ambassador for Start-Up Chile, a government initiative that seeks to attract high-potential entrepreneurs to the country.

“I want to give back,” he says.

The biggest challenge for any startup, he says, is bringing together the right team, especially if you plan to launch the company with another person.

“Being cofounders is like being married,” he says. “If you choose the wrong person, running a successful startup together will be difficult.”

You need to pick people who share your passion and have a similar mindset, a positive attitude, and strong ethics, he says.

Other challenges include developing a product or service that makes sense for its intended market and securing enough funding so that you can release the product in a timely way while it still can make an impact.

He tells budding entrepreneurs to ensure they’re forming a company for the right reasons. Found a startup only if “you feel passionate about a problem that keeps you awake at night and that you believe you’re the only person who can solve,” he says. “If the reason why you started a company is strong, it will get you through the hurdles you will face.”

Pillai advises startup founders to take occasional breaks from work.

“In the four and a half years I was CEO of my first company, work was my life,” he says. He was so driven that he showed up once to an investor’s meeting while he was sick, instead of rescheduling it. He says he thinks that’s the reason the investor ended up backing away from the project.

Pillai says he wishes he had had a mentor to tell him that he needed to take it easy, and that things would work out in the end.

“Learning all of these lessons on my own was a humbling experience,” he says.

Joanna Goodrich is the assistant editor of The Institute, covering the work and accomplishments of IEEE members and IEEE and technology-related events. She has a master's degree in health communications from Rutgers University, in New Brunswick, N.J.

Peer-to-peer file sharing would make the Internet far more efficient

When the COVID-19 pandemic erupted in early 2020, the world made an unprecedented shift to remote work. As a precaution, some Internet providers scaled back service levels temporarily, although that probably wasn’t necessary for countries in Asia, Europe, and North America, which were generally able to cope with the surge in demand caused by people teleworking (and binge-watching Netflix). That’s because most of their networks were overprovisioned, with more capacity than they usually need. But in countries without the same level of investment in network infrastructure, the picture was less rosy: Internet service providers (ISPs) in South Africa and Venezuela, for instance, reported significant strain.

But is overprovisioning the only way to ensure resilience? We don’t think so. To understand the alternative approach we’re championing, though, you first need to recall how the Internet works.

The core protocol of the Internet, aptly named the Internet Protocol (IP), defines an addressing scheme that computers use to communicate with one another. This scheme assigns addresses to specific devices—people’s computers as well as servers—and uses those addresses to send data between them as needed.

It’s a model that works well for sending unique information from one point to another, say, your bank statement or a letter from a loved one. This approach made sense when the Internet was used mainly to deliver different content to different people. But this design is not well suited for the mass consumption of static content, such as movies or TV shows.

The reality today is that the Internet is more often used to send exactly the same thing to many people, and it’s doing a huge amount of that now, much of which is in the form of video. The demands grow even higher as our screens obtain ever-increasing resolutions, with 4K video already in widespread use and 8K on the horizon.

The content delivery networks (CDNs) used by streaming services such as Netflix help address the problem by temporarily storing content close to, or even inside, many ISPs. But this strategy relies on ISPs and CDNs being able to make deals and deploy the required infrastructure. And it can still leave the edges of the network having to handle more traffic than actually needs to flow.

The real problem is not so much the volume of content being passed around—it’s how it is being delivered, from a central source to many different far-away users, even when those users are located right next to one another.

A more efficient distribution scheme in that case would be for the data to be served to your device from your neighbor’s device in a direct peer-to-peer manner. But how would your device even know whom to ask? Welcome to the InterPlanetary File System (IPFS).

The InterPlanetary File System gets its name because, in theory, it could be extended to share data even between computers on different planets of the solar system. For now, though, we’re focused on rolling it out for just Earth!

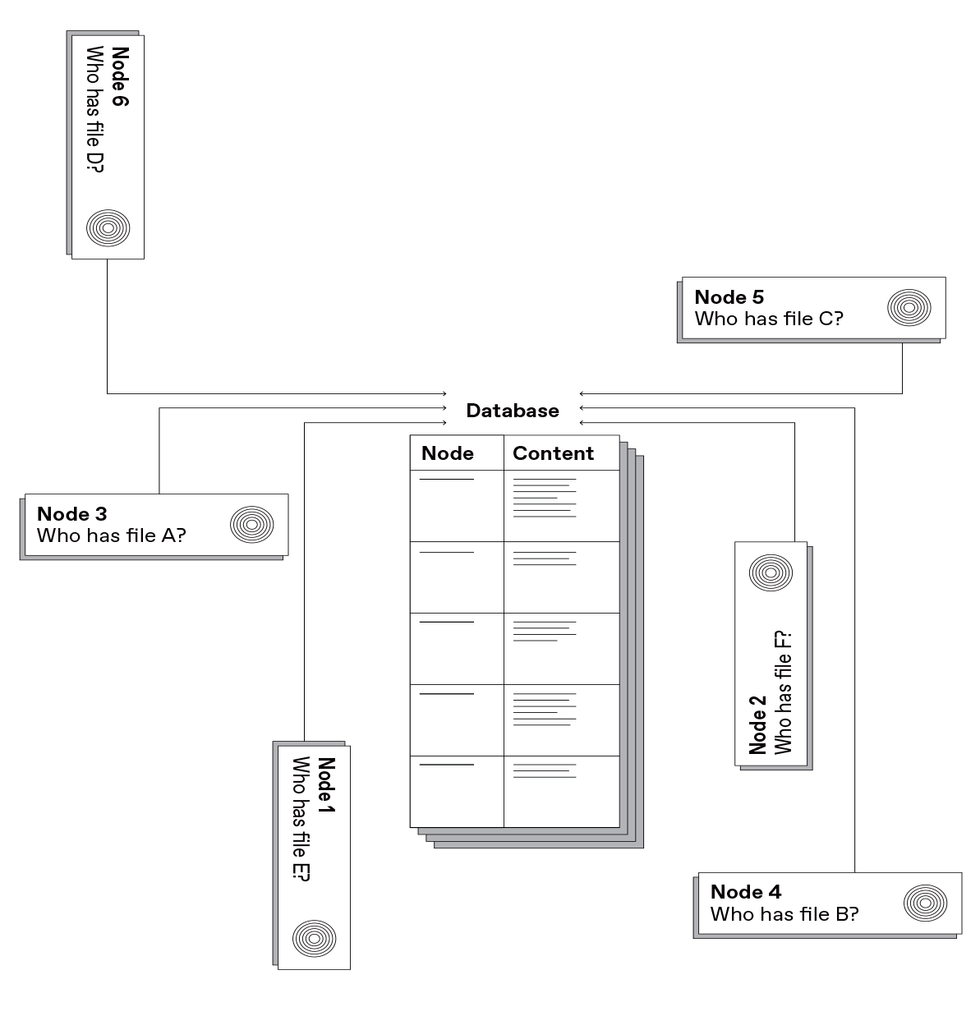

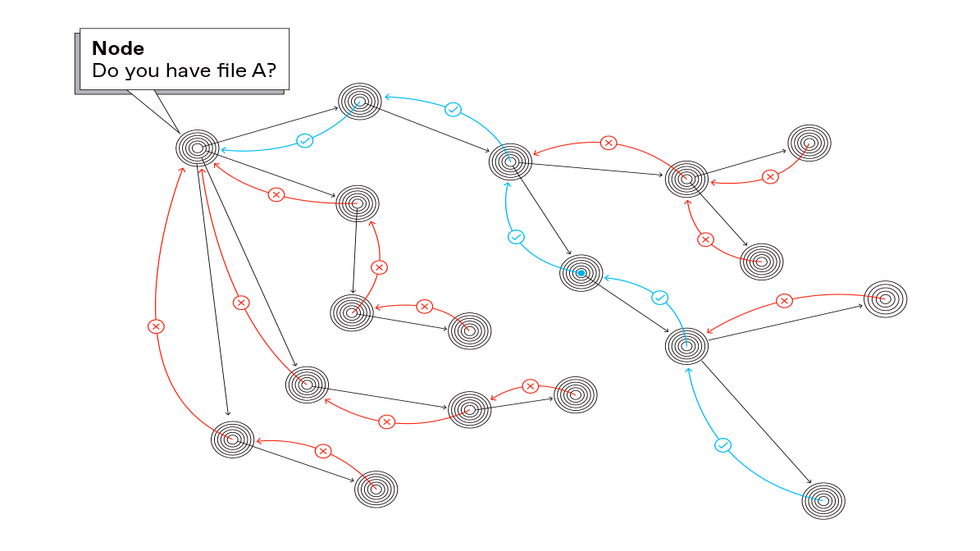

The key to IPFS is what’s called content addressing. Instead of asking a particular provider, “Please send me this file,” your machine asks the network, “Who can send me this file?” It starts by querying peers: other computers in the user’s vicinity, others in the same house or office, others in the same neighborhood, others in the same city—expanding progressively outward to globally distant locations, if need be, until the system finds a copy of what you’re looking for.
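The outward-expanding search described above can be sketched as a toy lookup. This is only an illustration under stated assumptions: the `Peer` class, the locality labels in `SCOPES`, and the identifier `"abc123"` are all hypothetical, and a real network has no such tidy labels or global list of peers.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Peer:
    name: str
    scope: str  # hypothetical locality label, not a real IPFS concept
    store: dict = field(default_factory=dict)  # content id -> bytes

# Hypothetical search order, nearest first.
SCOPES = ["house", "neighborhood", "city", "global"]

def find_provider(content_id: str, peers: list) -> Optional[Peer]:
    """Ask "who can send me this?" in widening circles; return the first holder."""
    for scope in SCOPES:
        for peer in peers:
            if peer.scope == scope and content_id in peer.store:
                return peer
    return None  # nobody on the network holds a copy

peers = [
    Peer("origin-server", "global", {"abc123": b"movie"}),
    Peer("neighbor-laptop", "neighborhood", {"abc123": b"movie"}),
    Peer("office-pc", "house"),  # nearby, but holds nothing
]

nearest = find_provider("abc123", peers)
print(nearest.name)  # neighbor-laptop
```

The request names the content rather than a machine, so the copy on the neighbor's laptop is found before the distant origin server is ever contacted.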

These queries are made using IPFS, an alternative to the Hypertext Transfer Protocol (HTTP), which powers the World Wide Web. Building on the principles of peer-to-peer networking and content-based addressing, IPFS allows for a decentralized and distributed network for data storage and delivery.

The benefits of IPFS include faster and more-efficient distribution of content. But they don’t stop there. IPFS can also improve security with content-integrity checking so that data cannot be tampered with by intermediary actors. And with IPFS, the network can continue operating even if the connection to the originating server is cut or if the service that initially provided the content is experiencing an outage—particularly important in places with networks that work only intermittently. IPFS also offers resistance to censorship.

To understand more fully how IPFS differs from most of what takes place online today, let’s take a quick look at the Internet’s architecture and some earlier peer-to-peer approaches.

As mentioned above, with today’s Internet architecture, you request content based on a server’s address. This comes from the protocol that underlies the Internet and governs how data flows from point to point, a scheme first described by Vint Cerf and Bob Kahn in a 1974 paper in the IEEE Transactions on Communications and now known as the Internet Protocol. The World Wide Web is built on top of the Internet Protocol. Browsing the Web consists of asking a specific machine, identified by an IP address, for a given piece of data.

The process starts when a user types a URL into the address bar of the browser, which takes the hostname portion and sends it to a Domain Name System (DNS) server. That DNS server returns a corresponding numerical IP address. The user’s browser will then connect to the IP address and ask for the Web page located at that URL.
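As a rough sketch of that location-based flow, the snippet below splits a URL into a hostname and a path and then looks the hostname up in a stand-in table. `TOY_DNS`, the hostname, and the address (taken from a range reserved for documentation) are assumptions made for illustration; a real browser would query an actual DNS server.

```python
from urllib.parse import urlsplit

# Hypothetical stand-in for a DNS server's records.
TOY_DNS = {"example.org": "192.0.2.10"}

def locate(url: str) -> tuple:
    """Return (ip_address, path): which machine to ask, and what to ask it for."""
    parts = urlsplit(url)
    ip_address = TOY_DNS[parts.hostname]  # hostname -> numerical address
    path = parts.path or "/"
    return ip_address, path

ip_address, path = locate("https://example.org/videos/cat.mp4")
print(ip_address, path)  # 192.0.2.10 /videos/cat.mp4
```

Nothing in the request describes the bytes being asked for; it only names a machine and a location on that machine.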

In other words, even if a computer in the same building has a copy of the desired data, it will neither see the request nor be able to match it to the copy it holds, because the content does not have an intrinsic identifier; that is, it is not content-addressed.

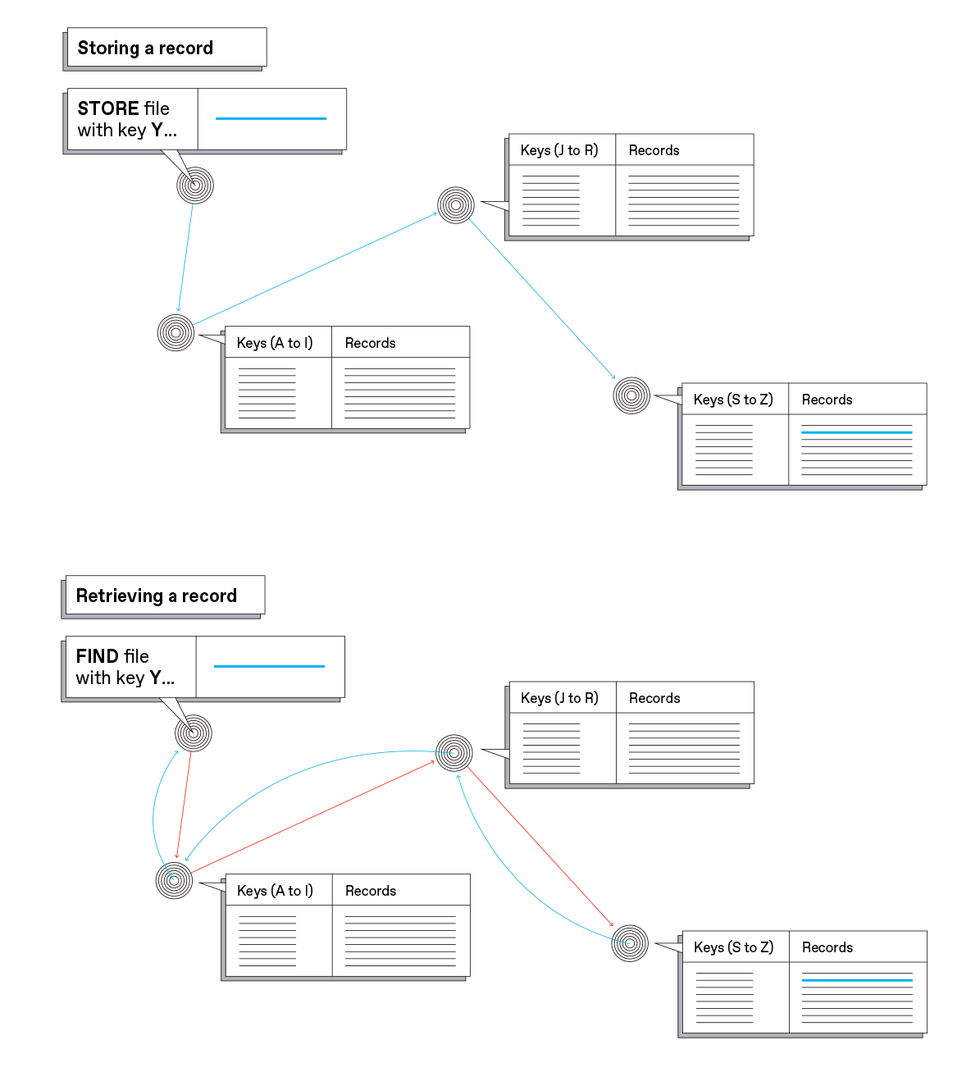

A content-addressing model for the Internet would give data, not devices, the leading role. Requesters would ask for the content explicitly, using a unique identifier (akin to the DOI number of a journal article or the ISBN of a book), and the Internet would handle forwarding the request to an available peer that has a copy.

The major challenge in doing so is that it would require changes to the core Internet infrastructure, which is owned and operated by thousands of ISPs worldwide, with no central authority able to control what they all do. While this distributed architecture is one of the Internet’s greatest strengths, it makes it nearly impossible to make fundamental changes to the system, which would then break things for many of the people using it. It’s often very hard even to implement incremental improvements. A good example of the difficulty encountered when introducing change is IPv6, which expands the number of possible IP addresses. Today, almost 25 years after its introduction, it still hasn’t reached 50 percent adoption.

A way around this inertia is to implement changes at a higher layer of abstraction, on top of existing Internet protocols, requiring no modification to the underlying networking software stacks or intermediate devices.

Other peer-to-peer systems besides IPFS, such as BitTorrent and Freenet, have tried to do this by introducing systems that can operate in parallel with the World Wide Web, albeit often with Web interfaces. For example, you can click on a Web link for the BitTorrent tracker associated with a file, but this process typically requires that the tracker data be passed off to a separate application from your Web browser to handle the transfers. And if you can’t find a tracker link, you can’t find the data.

Freenet also uses a distributed peer-to-peer system to store content, which can be requested via an identifier and can even be accessed using the Web’s HTTP protocol. But Freenet and IPFS have different aims: Freenet has a strong focus on anonymity and manages the replication of data in ways that serve that goal but lessen performance and user control. IPFS provides flexible, high-performance sharing and retrieval mechanisms but keeps control over data in the hands of the users.

We designed IPFS as a protocol to upgrade the Web and not to create an alternative version. It is designed to make the Web better, to allow people to work offline, to make links permanent, to be faster and more secure, and to make it as easy as possible to use.

IPFS started in 2013 as an open-source project supported by Protocol Labs, where we work, and built by a vibrant community and ecosystem with hundreds of organizations and thousands of developers. IPFS is built on a strong foundation of previous work in peer-to-peer (P2P) networking and content-based addressing.

The core tenet of all P2P systems is that users simultaneously participate as clients (which request and receive files from others) and as servers (which store and send files to others). The combination of content addressing and P2P provides the right ingredients for fetching data from the closest peer that holds a copy of what’s desired—or more correctly, the closest one in terms of network topology, though not necessarily in physical distance.

To make this happen, IPFS produces a fingerprint of the content it holds (called a hash) that, for all practical purposes, no other item shares. That hash can be thought of as a unique address for that piece of content. Changing a single bit in that content will yield an entirely different address. Computers wanting to fetch this piece of content broadcast a request for a file with this particular hash.
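A minimal sketch of that fingerprinting idea follows. It assumes SHA-256 as the hash function, which the text above does not specify, and the helper name `fingerprint` is hypothetical.

```python
import hashlib

def fingerprint(content: bytes) -> str:
    """Derive an address from the content itself (SHA-256 is an assumption)."""
    return hashlib.sha256(content).hexdigest()

original = b"hello, interplanetary world"
# Flip the lowest bit of the first byte; everything else stays identical.
flipped = bytes([original[0] ^ 0x01]) + original[1:]

address_a = fingerprint(original)
address_b = fingerprint(flipped)

print(address_a)
print(address_b)
print(address_a == address_b)  # False
```

The same bytes always produce the same address, while the one-bit change produces a different one.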

Because identifiers are unique and never change, people often refer to IPFS as the “Permanent Web.” And with identifiers that never change, the network will be able to find a specific file as long as some computer on the network stores it.

Name persistence and immutability inherently provide another significant property: verifiability. Having the content and its identifier, a user can verify that what was received is what was asked for and has not been tampered with, either in transit or by the provider. This not only improves security but also helps safeguard the public record and prevent history from being rewritten.
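Verification of this kind can be sketched in a few lines: the receiver recomputes the identifier from the bytes it was given and compares the result with the identifier it asked for. SHA-256 and the function names are assumptions made for illustration.

```python
import hashlib

def content_id(data: bytes) -> str:
    # Assumption: the identifier is a plain SHA-256 digest of the bytes.
    return hashlib.sha256(data).hexdigest()

def verify(requested_id: str, received: bytes) -> bool:
    """True only if the received bytes hash to the identifier that was requested."""
    return content_id(received) == requested_id

page = b"<h1>Public record</h1>"
wanted = content_id(page)

print(verify(wanted, page))                          # True
print(verify(wanted, b"<h1>Rewritten record</h1>"))  # False
```

Because the check needs only the content and its identifier, it works no matter which peer supplied the bytes.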

You might wonder what would happen with content that needs to be updated to include fresh information, such as a Web page. This is a valid concern and IPFS does have a suite of mechanisms that would point users to the most up-to-date content.
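One general pattern for this, shown here as a toy and not as a description of any specific IPFS mechanism, is a mutable name that points at an immutable identifier. The dictionaries `blocks` and `names` and the name `"my-homepage"` are hypothetical.

```python
import hashlib

blocks = {}  # content id -> bytes; entries never change once stored
names = {}   # hypothetical mutable name -> current content id

def publish(name: str, data: bytes) -> str:
    """Store a new immutable version and repoint the name at it."""
    cid = hashlib.sha256(data).hexdigest()
    blocks[cid] = data
    names[name] = cid
    return cid

def fetch_latest(name: str) -> bytes:
    return blocks[names[name]]

v1 = publish("my-homepage", b"version 1")
v2 = publish("my-homepage", b"version 2")

print(fetch_latest("my-homepage"))  # b'version 2'
print(blocks[v1])                   # b'version 1'
```

The old version stays reachable under its original identifier, while readers who follow the name get the newest one.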

The world had a chance to observe how content addressing worked in April 2017 when the government of Turkey blocked access to Wikipedia because an article on the platform described Turkey as a state that sponsored terrorism. Within a week, a full copy of the Turkish version of Wikipedia was added to IPFS, and it remained accessible to people in the country for the nearly three years that the ban continued.

A similar demonstration took place half a year later, when the Spanish government tried to suppress an independence referendum in Catalonia, ordering ISPs to block related websites. Once again, the information remained available via IPFS.

IPFS is an open, permissionless network: Any user can join and fetch or provide content. Despite numerous open-source success stories, the current Internet is heavily based on closed platforms, many of which adopt lock-in tactics but also offer users great convenience. While IPFS can provide improved efficiency, privacy, and security, giving this decentralized platform the level of usability that people are accustomed to remains a challenge.

You see, the peer-to-peer, unstructured nature of IPFS is both a strength and a weakness. While CDNs have built sprawling infrastructure and advanced techniques to provide high-quality service, IPFS nodes are operated by end users. The network therefore relies on their behavior—how long their computers are online, how good their connectivity is, and what data they decide to cache. And often those things are not optimal.

One of the key research questions for the folks working at Protocol Labs is how to keep the IPFS network resilient despite shortcomings in the nodes that make it up—or even when those nodes exhibit selfish or malicious behavior. We’ll need to overcome such issues if we’re to keep the performance of IPFS competitive with conventional distribution channels.

You may have noticed that we haven’t yet provided an example of an IPFS address. That’s because hash-based addressing results in URLs that aren’t easy to spell out or type.

For instance, you can find the Wikipedia logo on IPFS by using the following address in a suitable browser: ipfs://QmRW3V9znzFW9M5FYbitSEvd5dQrPWGvPvgQD6LM22Tv8D/. That long string can be thought of as a digital fingerprint for the file holding that logo.
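To see where a string of that shape can come from, the sketch below prefixes a SHA-256 digest with two bytes naming the hash function and its length, then writes the result in base-58. This is an assumption-laden illustration: real IPFS identifiers are computed over the system's own internal encoding of a file, so the code will not reproduce the address of the Wikipedia logo, and the function names are hypothetical.

```python
import hashlib

# Base-58 alphabet that omits the look-alike characters 0, O, I, and l.
B58_ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"

def base58_encode(data: bytes) -> str:
    num = int.from_bytes(data, "big")
    out = ""
    while num > 0:
        num, rem = divmod(num, 58)
        out = B58_ALPHABET[rem] + out
    # Each leading zero byte is written as the first alphabet character.
    pad = len(data) - len(data.lstrip(b"\x00"))
    return "1" * pad + out

def qm_style_id(block: bytes) -> str:
    digest = hashlib.sha256(block).digest()
    # 0x12 marks SHA-256 and 0x20 gives the digest length (32 bytes).
    tagged = bytes([0x12, 0x20]) + digest
    return base58_encode(tagged)

ident = qm_style_id(b"example block")
print(ident[:2], len(ident))  # Qm 46
```

The two prefix bytes are the reason every identifier built this way begins with "Qm".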

There are other content-addressing schemes that use human-readable naming, or hierarchical, URL-style naming, but each comes with its own set of trade-offs. Finding practical ways to use human-readable names with IPFS would go a long way toward improving user-friendliness. It’s a goal, but we’re not there yet.

Protocol Labs has been tackling these and other technical, usability, and societal issues for most of the last decade. Over this time, we have been seeing rapidly increasing adoption of IPFS, with its network size doubling year over year. Scaling up at such speeds brings many challenges. But that’s par for the course when your intent is changing the Internet as we know it.

Widespread adoption of content addressing and IPFS should help the whole Internet ecosystem. By empowering users to request exact content and verify that they received it unaltered, IPFS will improve trust and security. Reducing the duplication of data moving through the network and procuring it from nearby sources will let ISPs provide faster service at lower cost. Enabling the network to continue providing service even when it becomes partitioned will make our infrastructure more resilient to natural disasters and other large-scale disruptions.

But is there a dark side to decentralization? We often hear concerns about how peer-to-peer networks may be used by bad actors to support illegal activity. These concerns are important but sometimes overstated.

One area where IPFS improves on HTTP is in allowing comprehensive auditing of stored data. For example, thanks to its content-addressing functionality and, in particular, to the use of unique and permanent content identifiers, IPFS makes it easier to determine whether certain content is present on the network, and which nodes are storing it. Moreover, IPFS makes it trivial for users to decide what content they distribute and what content they stop distributing (by merely deleting it from their machines).
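A toy model of that auditing property is sketched below. The `nodes` table and the identifiers in it are hypothetical, and a real network offers no such global view in a single dictionary; the point is only that a fixed identifier makes "who holds this?" a well-defined question.

```python
# Hypothetical snapshot: node name -> set of content ids it currently stores.
nodes = {
    "alice": {"cid-1", "cid-2"},
    "bob": {"cid-2"},
    "carol": set(),
}

def providers(cid: str) -> list:
    """List the nodes that currently hold the given content id."""
    return sorted(name for name, held in nodes.items() if cid in held)

def stop_distributing(node: str, cid: str) -> None:
    """A user withdraws content simply by deleting their own copy."""
    nodes[node].discard(cid)

before = providers("cid-2")
stop_distributing("alice", "cid-2")
after = providers("cid-2")

print(before)  # ['alice', 'bob']
print(after)   # ['bob']
```

Alice's deletion affects only her own machine; Bob's copy remains available.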

At the same time, IPFS provides no mechanisms to allow for censorship, given that it operates as a distributed P2P file system with no central authority. So there is no actor with the technical means to prohibit the storage and propagation of a file or to delete a file from other peers’ storage. Consequently, censorship of unwanted content cannot be technically enforced, which represents a safeguard for users whose freedom of speech is under threat. Lawful requests to take down content are still possible, but they need to be addressed to the users actually storing it, avoiding commonplace abuses (like illegitimate DMCA takedown requests) against which large platforms have difficulties defending.

Ultimately, IPFS is an open network, governed by community rules, and open to everyone. And you can become a part of it today! The Brave browser ships with built-in IPFS support, as does Opera for Android. There are browser extensions available for Chrome and Firefox, and IPFS Desktop makes it easy to run a local node. Several organizations provide IPFS-based hosting services, while others operate public gateways that allow you to fetch data from IPFS through the browser without any special software.

These gateways act as entries to the P2P network and are important to bootstrap adoption. Through some simple DNS magic, a domain can be configured so that a user’s access request will result in the corresponding content being retrieved and served by a gateway, in a way that is completely transparent to the user.
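The two pieces can be sketched as simple string handling. The sketch assumes a gateway that serves content under an `/ipfs/<identifier>` path and a DNS TXT record whose value has the form `dnslink=/ipfs/<identifier>`; the text above does not name the DNS mechanism, so treat that format as an assumption. The host `gateway.example` is a placeholder.

```python
def to_gateway_url(ipfs_uri: str, gateway_host: str) -> str:
    """Rewrite ipfs://<id>/<path> into an ordinary HTTPS URL on a gateway."""
    prefix = "ipfs://"
    if not ipfs_uri.startswith(prefix):
        raise ValueError("expected an ipfs:// address")
    # Plain slicing keeps the identifier's case, which is significant.
    return f"https://{gateway_host}/ipfs/{ipfs_uri[len(prefix):]}"

def parse_dnslink(txt_value: str) -> str:
    """Extract the content path from a TXT record value (assumed format)."""
    prefix = "dnslink="
    if not txt_value.startswith(prefix):
        raise ValueError("not a dnslink record")
    return txt_value[len(prefix):]

logo = "ipfs://QmRW3V9znzFW9M5FYbitSEvd5dQrPWGvPvgQD6LM22Tv8D/"
url = to_gateway_url(logo, "gateway.example")
print(url)

content_path = parse_dnslink("dnslink=/ipfs/QmRW3V9znzFW9M5FYbitSEvd5dQrPWGvPvgQD6LM22Tv8D")
print(content_path)
```

From the user's side only an ordinary domain name or HTTPS link is visible; the gateway does the content-addressed lookup on their behalf.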

So far, IPFS has been used to build varied applications, including systems for e-commerce, secure distribution of scientific data sets, mirroring Wikipedia, creating new social networks, sharing cancer data, blockchain creation, secure and encrypted personal-file storage and sharing, developer tools, and data analytics.

You may have used this network already: If you’ve ever visited the Protocol Labs site (Protocol.ai), you’ve retrieved pages of a website from IPFS without even realizing it!